Tutorials

JBConnect Hook Tutorial

api/services/localCommonService.js is a workflow processing Job Service that can be used to execute general workflow scripts.

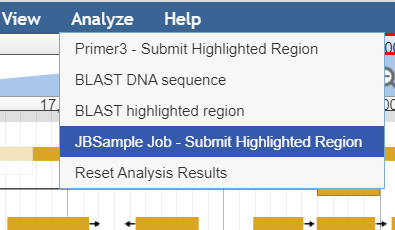

In this example, we present a demo analysis hook (demo-jbconnect-hook). We show how to create a client-side JBrowse plugin that integrates with JBrowse by adding a menu item under the Analyze menu.

It’s a fully functional demo module that has a server-side execution shell script and performs some arbitrary processing. The example also demonstrates how the client-side plugin collects user data in its submit dialog box and passes it to the execution script.

The demo hook described in this section can be found here: <https://github.com/GMOD/demo-jbconnect-hook>

JBCdemo JBrowse Plugin

This section describes a complete JBConnect installable hook.

The directory layout of a hook project is as follows:

```
JBConnect project
├── api                 Standard sails API layout, models, controllers, etc.
│   └── hook
│       └── index.js    hook index
├── bin                 Utilities
│   ├── jbutil-ext.js   jbutil command extensions
│   └── postinstall.js  package post installation
├── config              Configuration files
│   └── globals.js      global config file for module
├── plugins             Client-side plugins
│   └── JBCdemo         Demo client plugin
├── package.json        Node package description
└── workflows           Workflows directory
    ├── demo-job.demo.wf.sh            Workflow script
    └── demo-job.TrackTemplate.json    TrackTemplate
```

api/hook/index.js

The main purpose of this file is to facilitate merging the configurations, models and controllers with the main JBConnect, making them available globally.

Extending the jbutil command

jbutil-ext.js provides a means for the hook to extend the jbutil command.

This is further described in <https://jbconnect.readthedocs.io/en/latest/configuration.html#extending-jbutil>.

bin/postinstall.js

This performs the important role of copying the workflows in the hook project into the workflows directory of JBConnect.

It can also be used to perform other post-install setup.
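The copy step could look roughly like the following. This is a minimal sketch, not the demo hook's actual bin/postinstall.js. The function names copyWorkflows and defaultDirs are hypothetical. The directory layout is also an assumption: the hook is taken to be installed as <JBConnect>/node_modules/<hook>, with this script in <hook>/bin.

```javascript
const fs = require('fs');
const path = require('path');

// Copy every regular file from the hook's workflows directory into the
// JBConnect workflows directory. Returns the names of the copied files.
function copyWorkflows(srcDir, destDir) {
  fs.mkdirSync(destDir, { recursive: true }); // no-op if it already exists
  const copied = [];
  for (const name of fs.readdirSync(srcDir)) {
    const src = path.join(srcDir, name);
    if (!fs.statSync(src).isFile()) continue; // skip subdirectories
    fs.copyFileSync(src, path.join(destDir, name));
    copied.push(name);
  }
  return copied;
}

// Assumed layout (hypothetical): this file is <hook>/bin/postinstall.js and
// the hook is installed as <JBConnect>/node_modules/<hook>.
function defaultDirs(binDir) {
  const hookDir = path.resolve(binDir, '..');
  return {
    src: path.join(hookDir, 'workflows'),
    dest: path.join(hookDir, '..', '..', 'workflows'),
  };
}
```

Inside postinstall.js, the two helpers would be combined as `const { src, dest } = defaultDirs(__dirname); copyWorkflows(src, dest);`.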

globals.js & workflowFilter

The hook's globals.js is merged with the globals.js of JBConnect.

For the demo, workflowFilter applies to the get_workflows enumeration call.

In main.js of the plugin, the following structure is defined:

```javascript
// analyze menu structure
browser.jbconnect.analyzeMenus.demo = {
    title: 'Demo Analysis',
    module: 'demo',
    init: initMenu,
    contents: dialogContent,
    process: processInput
};
```

Note that the module, defined as ‘demo’ here, is used by the get_workflows call to filter the available workflows for a particular plugin. The ‘demo’ entry in workflowFilter describes the filter: only files whose names contain ‘.demo.wf’ are returned by get_workflows.

```javascript
module.exports.globals = {
    jbrowse: {
        workflowFilter: {
            demo: {filter: '.demo.wf'},
        },
        ...
    }
};
```
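The filtering rule described above can be illustrated with a small function. This sketch is not JBConnect's actual get_workflows code, and filterWorkflows is a hypothetical name. Returning every file when a module has no filter entry is an assumption.

```javascript
// Sketch of the workflowFilter rule: keep only the workflow file names that
// contain the filter string configured for the requesting module.
const workflowFilter = {
  demo: { filter: '.demo.wf' },
};

function filterWorkflows(fileNames, moduleName, filters) {
  const entry = filters[moduleName];
  // Assumption: a module without a filter entry gets the unfiltered list.
  if (!entry) return fileNames.slice();
  return fileNames.filter((name) => name.includes(entry.filter));
}

const files = [
  'demo-job.demo.wf.sh',
  'sample.samp.wf.sh',
  'demo-job.TrackTemplate.json',
];
console.log(filterWorkflows(files, 'demo', workflowFilter).join(', '));
// prints demo-job.demo.wf.sh
```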

The analyzeMenus structure shown earlier is defined in the constructor of main.js. The source can be found here: <https://github.com/GMOD/demo-jbconnect-hook/blob/master/plugins/JBCdemo/js/main.js>

In the analyzeMenus structure:

- title is the title of the dialog box that is launched from the Analyze menu.

- module is the module name. It coincides with the module name used in Configuration of sample workflow script.

- init is the function that initializes the selection items in the Analyze menu for the module.

- contents is a function that builds the contents of the dialog box. This can be used to collect custom data prior to submitting.

- process is a function that collects the custom data from the fields created by contents and passes it through the submit function.

In our example, initMenu() sets up the Analyze menu item. When the user selects the item, it checks whether a region has been highlighted; nearly all of our processing modules perform this check. If no region is highlighted, we show an instructional dialog box asking the user to highlight a region using JBrowse’s highlight feature.
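The highlight check can be sketched as a plain function. The shape of the highlight object is an assumption made for illustration: {ref, start, end}, or null when nothing is highlighted. The name regionFromHighlight is hypothetical. In the plugin, the value would come from JBrowse's highlight state.

```javascript
// Hypothetical helper: turn a highlight into a region string, or return
// null so the caller can show the "please highlight a region" dialog.
function regionFromHighlight(highlight) {
  if (!highlight || highlight.ref === undefined) return null;
  const { ref, start, end } = highlight;
  if (!(Number.isInteger(start) && Number.isInteger(end)) || end <= start) {
    return null;
  }
  return `${ref}:${start}..${end}`;
}

const region = regionFromHighlight({ ref: 'ctgA', start: 1000, end: 2000 });
if (region === null) {
  console.log('Please highlight a region first.');
} else {
  console.log('Submitting region', region);
}
```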

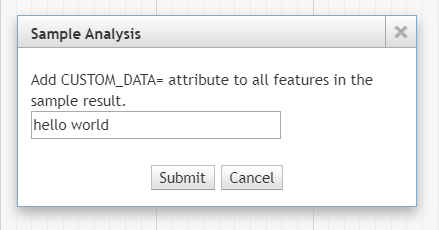

In dialogContent(), we render an additional field in the submit dialog box for CUSTOM_DATA. The user can type any value in the input box.

Upon submitting the job, the demonstration shows how data is passed from the user end to the execution script.

processInput() is called when the user clicks Submit. Here we show how the custom input field data is collected and passed to the system, so that it is ultimately submitted to JBConnect.
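The hand-off from the dialog field to the submitted job data can be sketched as follows. The signature (field values in, a mutable postData object out) and the name collectCustomData are hypothetical; the demo plugin's actual processInput() may differ. The idea illustrated is that whatever is placed on the submitted object becomes part of the job data that the workflow script later reads.

```javascript
// Hypothetical sketch: copy the CUSTOM_DATA dialog field into the object
// that is submitted to JBConnect as the job's data.
function collectCustomData(fieldValues, postData) {
  const value = (fieldValues.CUSTOM_DATA || '').trim();
  if (value !== '') {
    postData.CUSTOM_DATA = value; // only add the field when the user typed something
  }
  return postData;
}

const postData = { region: 'ctgA:1000..2000', module: 'demo' };
collectCustomData({ CUSTOM_DATA: '  hello  ' }, postData);
console.log(JSON.stringify(postData, null, 2));
```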

Again, processing on the server begins in localCommonService.js, which takes control of the job and launches the workflow script selected by the user.

Note that the user will not see the workflow selection box unless there is more than one workflow. In our case there is only one workflow script, so the client plugin code selects it automatically.
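The selection rule in the note above amounts to the following. chooseWorkflow is a hypothetical name. The {id, name} entries are an assumed shape for the get_workflows results, modeled on the dummy list used later in this document.

```javascript
// Hypothetical sketch of the client-side rule: with exactly one workflow,
// select it automatically; otherwise the user picks from a selection box.
function chooseWorkflow(workflows) {
  if (workflows.length === 0) {
    return { showSelector: false, selected: null }; // nothing to run
  }
  if (workflows.length === 1) {
    return { showSelector: false, selected: workflows[0] };
  }
  return { showSelector: true, selected: null };
}

const result = chooseWorkflow([{ id: 'demo-job.demo.wf.sh', name: 'demo job' }]);
console.log(result.showSelector, result.selected.id);
// prints false demo-job.demo.wf.sh
```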

demo-job.demo.wf.sh Workflow Script

The workflow script resides in the workflows directory. In this example, it is a very simple script that copies sample.gff3 to the target directory. To demonstrate passing data from the client side to the server-side script, it also extracts the CUSTOM_DATA field captured by the client plugin and appends the value to every feature of sample.gff3.

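```bash
#!/bin/bash
# cmd <id> <jobid> <jobdata> <tmpdir> <outdir>
echo "> my.sample.wf.sh " "$0" "$1" "$2" "$3" "$4" "$5"

# copy sample.gff3 to target dir
cp ./bin/sample.gff3 "$5/$2.gff3"

# extract value of CUSTOM_DATA from the job data file
# (expects one "key": "value" pair per line; leading spaces are trimmed)
MYVALUE=$(awk -v k=CUSTOM_DATA -F: '/{|}/{next}{gsub(/^ +|,$/,"");gsub(/"/,"");if($1==k){sub(/^ +/,"",$2);print $2}}' "$3")

# add CUSTOM_DATA=MYVALUE as an attribute to all features
# (lines starting with # are GFF3 comments/directives and are skipped)
sed -i -e '/^#/!s/$/;CUSTOM_DATA='"$MYVALUE"'/' "$5/$2.gff3"
```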
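The awk command above only finds CUSTOM_DATA when the job data file has one "key": "value" pair per line. For comparison, here is a sketch of the same two steps in Node.js that parses the file as JSON instead. It is not part of the demo hook, and the function names are hypothetical. It leaves GFF3 comments, directives, and blank lines unchanged, and it percent-encodes the characters GFF3 reserves in attribute values. If the workflow were written in Node.js, the five parameters would arrive as process.argv[2] through process.argv[6].

```javascript
const fs = require('fs');

// Read CUSTOM_DATA from the job data file (parsed as JSON, so the file's
// layout does not matter). Returns '' when the field is absent.
function readCustomData(jobDataPath) {
  const jobData = JSON.parse(fs.readFileSync(jobDataPath, 'utf8'));
  return jobData.CUSTOM_DATA === undefined ? '' : String(jobData.CUSTOM_DATA);
}

// GFF3 reserves ; = & , in attribute values; percent-encode them.
function escapeAttrValue(value) {
  return value.replace(/[;=&,]/g,
    (c) => '%' + c.charCodeAt(0).toString(16).toUpperCase());
}

// Append key=value to column 9 of every feature line. Comments, directives
// (lines starting with #), blank lines, and lines without 9 tab-separated
// columns are left unchanged.
function addAttribute(gff3Text, key, value) {
  const attr = `${key}=${escapeAttrValue(value)}`;
  return gff3Text
    .split('\n')
    .map((line) => {
      if (line === '' || line.startsWith('#')) return line;
      const cols = line.split('\t');
      if (cols.length < 9) return line;
      // An empty attribute column is written as "."
      cols[8] = cols[8] === '.' || cols[8] === '' ? attr : `${cols[8]};${attr}`;
      return cols.join('\t');
    })
    .join('\n');
}
```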

Note the five parameters ($1 to $5) that localCommonService passes to the script:

- $0 is the script path (e.g. “/home/theuser/jbconnect/workflows/sample.samp.wf.sh”)

- $1 <id> the job id (e.g. “32”)

- $2 <jobid> the job name (e.g. “32-sample”)

- $3 <jobdata> path of the job data file (e.g. “/home/theuser/jbconnect/tmp/32-sample-jobdata.json”)

- $4 <tmpdir> the directory where temporary or intermediate files might be placed.

- $5 <outdir> the target directory where result files (like gff3 files) might be placed.

The full command looks something like this:

```bash
/home/theuser/jbconnect/workflows/sample.samp.wf.sh 32 32-sample \
    /home/theuser/jbconnect/tmp/32-sample-jobdata.json \
    /home/theuser/jbconnect/tmp /home/theuser/jb1151/sample_data/json/volvox/sample
```
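The argument order can be captured in a small helper, for example when writing a job service that launches such scripts. This is a hypothetical sketch: buildWorkflowArgs and the field names of its input object are made up for illustration. The argument order comes from the list above. The <jobid>-jobdata.json file name pattern is inferred from the example path.

```javascript
const path = require('path');

// Hypothetical helper that assembles the five positional arguments
// (<id> <jobid> <jobdata> <tmpdir> <outdir>) passed to a workflow script.
function buildWorkflowArgs({ id, jobName, tmpDir, outDir }) {
  // Assumption: the job data file is named <jobid>-jobdata.json in tmpDir,
  // as in the example path above.
  const jobDataFile = path.join(tmpDir, `${jobName}-jobdata.json`);
  return [String(id), jobName, jobDataFile, tmpDir, outDir];
}

const args = buildWorkflowArgs({
  id: 32,
  jobName: '32-sample',
  tmpDir: '/home/theuser/jbconnect/tmp',
  outDir: '/home/theuser/jb1151/sample_data/json/volvox/sample',
});
console.log(['/home/theuser/jbconnect/workflows/sample.samp.wf.sh', ...args].join(' '));
```

An array like this can be passed directly to Node's child_process.spawn(scriptPath, args), which avoids shell quoting issues.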

localCommonService expects to find a file named <outdir>/<jobid>.gff3, so the script creates this result file in the target directory from its input parameters. This is simply how localCommonService works. If an application needs to produce other result files, a different Job Service would need to be created (see Creating a Stand-Alone Job Service for local workflow processing).
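A job service built around this convention could check for the result file before treating a run as successful. This is a minimal sketch; expectedResultFile and hasResult are hypothetical names.

```javascript
const fs = require('fs');
const path = require('path');

// Path of the result file described above: <outdir>/<jobid>.gff3
function expectedResultFile(outDir, jobName) {
  return path.join(outDir, `${jobName}.gff3`);
}

// True when the workflow script has produced its result file.
function hasResult(outDir, jobName) {
  return fs.existsSync(expectedResultFile(outDir, jobName));
}
```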

The script can be found in the workflows directory of the demo hook repository.

Configuration of localCommonService

The configuration is required to enable the system to recognize that the Job Service exists.

```javascript
services: {
    'localCommonService': {enable: true, name: 'localCommonService', type: 'workflow', alias: 'workflow'}
},
```

Creating a Stand-Alone Job Service for local workflow processing

This tutorial demonstrates how to create a job service that can be executed by the JBlast Plugin.

The source code for the tutorial can be found here

Job Runner functions

The function map defines the REST APIs that the job service supports.

In the function map (fmap), get_workflows is the only function the Process BLAST dialog minimally requires.

get_hit_details is not required, since we don’t actually perform a BLAST operation in this example.

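```javascript
const fs = require('fs');
// Assumption: postAction (the JBConnect helper library used below) and
// approot are provided by the JBConnect environment; not shown here.

module.exports = {
    fmap: {
        get_workflows: 'get'
    },
```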

(required by Job Service)

Provides an opportunity to initialize the Job Service module.

```javascript
    init(params, cb) {
        return cb();
    },
```

(required by Job Runner Service)

Provides a mechanism to validate the parameters given by the job queuer. Since our example job is submitted by JBlast, we expect to see a region parameter.

```javascript
    validateParams(params) {
        if (typeof params.region === 'undefined') return "region not defined";
        return 0;   // success
    },
```

(required by Job Runner Service)

The job service generates a readable name for the job, which appears in the job queue.

```javascript
    generateName(params) {
        return "sample job";
    },
```

(required by JBClient, not required for Job Services in general)

Returns a list of available options. This is used to populate the plugin’s Workflow selection. It should return at least one item for the JBlast client to work properly. Here, we are just passing a dummy list, which is ignored by the rest of the example.

```javascript
    get_workflows(req, res) {
        let wflist = [
            {
                id: "something",
                name: "sample do nothing job",
                script: "something",
                path: "./"
            }
        ];
        res.ok(wflist);
    },
```

(required by Job Runner Service)

beginProcessing() is called by the job execution engine to begin processing.

The kJob parameter is a reference to the Kue job.

```javascript
    beginProcessing(kJob) {
        let thisb = this;
        let nothingName = "sample nothing ";

        kJob.data.count = 10;   // 10 seconds of nothing

        let f1 = setInterval(function() {
            if (kJob.data.count === 0) {
                clearInterval(f1);
                thisb._postProcess(kJob);
                return;         // stop here so the count is not decremented past 0
            }
            // update the job text
            kJob.data.name = nothingName + kJob.data.count--;
            kJob.update(function() {});
        }, 1000);
    },
```
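The countdown in beginProcessing() can be separated from the timer so that it can be exercised without Kue. This sketch uses a hypothetical tick() function and a stub object in place of the Kue job; the stub mimics only data and update(). In beginProcessing(), the interval callback would then reduce to `if (tick(kJob, nothingName)) { clearInterval(f1); thisb._postProcess(kJob); }`.

```javascript
// Sketch: one step of the "do nothing" countdown, separated from setInterval.
// Returns true when the job is finished.
function tick(kJob, baseName) {
  if (kJob.data.count === 0) {
    return true; // caller stops the timer and starts post-processing
  }
  kJob.data.name = baseName + kJob.data.count--;
  kJob.update(function () {});
  return false;
}

// Stub standing in for a Kue job: only data and update() are mimicked.
const stubJob = {
  data: { count: 3 },
  updates: 0,
  update(cb) { this.updates++; cb(); },
};

const names = [];
while (!tick(stubJob, 'sample nothing ')) {
  names.push(stubJob.data.name);
}
console.log(names.join(', '));
// prints sample nothing 3, sample nothing 2, sample nothing 1
```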

```javascript
    // (not required)
    // After the job completes, we do some processing in postDoNothing() and
    // then call addToTrackList to insert a new track into JBrowse.
    _postProcess(kJob) {
        // insert track into trackList.json
        this.postDoNothing(kJob, function(newTrackJson) {
            postAction.addToTrackList(kJob, newTrackJson);
        });
    },

    // (not required)
    // Here, we do some arbitrary post-processing.
    // In this example, we set up a JBrowse track from a canned template.
    postDoNothing(kJob, cb) {
        let templateFile = approot + '/bin/nothingTrackTemplate.json';
        let newTrackJson = [JSON.parse(fs.readFileSync(templateFile, 'utf8'))];
        let trackLabel = kJob.id + ' sample job results';

        newTrackJson[0].label = "SAMPLEJOB_" + kJob.id + Math.random();
        newTrackJson[0].key = trackLabel;

        kJob.data.track = newTrackJson[0];
        kJob.update(function() {});

        cb(newTrackJson);
    }
};
```
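The track-building step in postDoNothing() can be written as a pure function, which is easier to test than code that reads the template from disk. This is a sketch with a hypothetical name, makeTrackFromTemplate. The example template is made up; the real template's fields are whatever nothingTrackTemplate.json contains. Passing the unique suffix in, instead of calling Math.random() inside, is a design choice made here so the output is deterministic.

```javascript
// Sketch: build a new JBrowse track config from a template object.
function makeTrackFromTemplate(template, jobId, uniqueSuffix) {
  // Deep-copy so the shared template is not modified between jobs.
  const track = JSON.parse(JSON.stringify(template));
  track.label = `SAMPLEJOB_${jobId}${uniqueSuffix}`;
  track.key = `${jobId} sample job results`;
  return track;
}

// Example template (made up for illustration).
const template = { type: 'JBrowse/View/Track/CanvasFeatures' };
const track = makeTrackFromTemplate(template, 32, '_a1');
console.log(track.label, '|', track.key);
// prints SAMPLEJOB_32_a1 | 32 sample job results
```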

Note that the job data shown in the queue can be changed as follows:

```javascript
kJob.data.name = nothingName + kJob.data.count--;
kJob.update(function() {});
```

Configuration

To enable the service, edit jbconnect.config.js: add the sampleJobService line under services and disable the other services.

```javascript
module.exports = {
    jbrowse: {
        services: {
            'sampleJobService':   {enable: true,  name: 'sampleJobService',   type: 'workflow'},   // <==== add this line
            'localBlastService':  {enable: false, name: 'localBlastService',  type: 'workflow', alias: "jblast"},
            'galaxyBlastService': {enable: false, name: 'galaxyBlastService', type: 'workflow', alias: "jblast"}
        },
    }
};
```
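In this configuration, localBlastService and galaxyBlastService share the alias "jblast". A quick sanity check over the services map could list the enabled services and flag aliases claimed by more than one of them. This is a hypothetical helper, not part of JBConnect. Treating two enabled services with the same alias as a conflict is an assumption.

```javascript
// Hypothetical sanity check: list enabled services and report aliases that
// are claimed by more than one enabled service.
function checkServices(services) {
  const enabled = Object.keys(services).filter((k) => services[k].enable);
  const byAlias = {};
  for (const name of enabled) {
    const alias = services[name].alias;
    if (!alias) continue;
    (byAlias[alias] = byAlias[alias] || []).push(name);
  }
  const conflicts = Object.keys(byAlias).filter((a) => byAlias[a].length > 1);
  return { enabled, conflicts };
}

const services = {
  sampleJobService: { enable: true, name: 'sampleJobService', type: 'workflow' },
  localBlastService: { enable: false, name: 'localBlastService', type: 'workflow', alias: 'jblast' },
  galaxyBlastService: { enable: false, name: 'galaxyBlastService', type: 'workflow', alias: 'jblast' },
};
console.log(JSON.stringify(checkServices(services)));
// prints {"enabled":["sampleJobService"],"conflicts":[]}
```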

Monitoring processing

The job runner is responsible for monitoring the state of any potentially lengthy analysis operation. If the job runner service is intended to perform a lengthy analysis, it needs some mechanism to detect when the operation completes.
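One possible mechanism is to poll for an output file and finish when it appears or when a timeout expires. The sketch below is hypothetical: pollForFile, the polling interval, and the timeout are illustrative choices. It reports through a callback, which in a job service could be wired to the job's completion call, e.g. `pollForFile(resultPath, 5000, 3600000, (err) => err ? kJob.kDoneFn(err) : kJob.kDoneFn())`.

```javascript
const fs = require('fs');

// Hypothetical sketch: check for filePath every intervalMs; call done(null)
// when it exists, or done(err) once timeoutMs has elapsed without it.
function pollForFile(filePath, intervalMs, timeoutMs, done) {
  const started = Date.now();
  const timer = setInterval(() => {
    if (fs.existsSync(filePath)) {
      clearInterval(timer);
      done(null);
    } else if (Date.now() - started >= timeoutMs) {
      clearInterval(timer);
      done(new Error(`timed out waiting for ${filePath}`));
    }
  }, intervalMs);
  return timer; // allows the caller to cancel with clearInterval
}
```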

Completion processing

To complete a job, call one of the following.

```javascript
kJob.kDoneFn();                                        // success
kJob.kDoneFn(new Error("failed because something"));   // fail
```

This will change the status of the job to either completed or error.
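When the lengthy operation runs as an external program, its exit status maps naturally onto the two completion calls above. This sketch uses Node's child_process.spawn. runAndComplete is a hypothetical name, and the stub jobs in the usage below mimic only kDoneFn. A guard ensures kDoneFn is called once, since a failed spawn can emit both 'error' and 'close'.

```javascript
const { spawn } = require('child_process');

// Sketch: run a command and complete the job from its exit status.
function runAndComplete(kJob, command, args) {
  let finished = false;
  const finish = (err) => {
    if (finished) return; // 'error' and 'close' can both fire
    finished = true;
    if (err) kJob.kDoneFn(err); // fail: job status becomes error
    else kJob.kDoneFn();        // success: job status becomes completed
  };
  const child = spawn(command, args, { stdio: 'inherit' });
  child.on('error', finish); // e.g. the command could not be started
  child.on('close', (code) => {
    finish(code === 0 ? undefined : new Error(`command exited with code ${code}`));
  });
  return child;
}
```

For example, `runAndComplete(kJob, scriptPath, args)` would run a workflow script and mark the job completed or failed depending on whether the script exits with status 0.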

In our example, the helper library postAction handles the completion:

```javascript
postAction.addToTrackList(kJob, newTrackJson);
```

When calling kJob.kDoneFn(), the module is responsible for performing any necessary cleanup.